By Lisa Uhlman

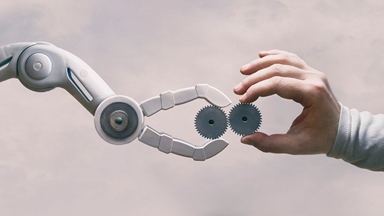

As businesses embrace artificial intelligence (AI) for its promise of productivity and efficiency gains, they risk worsening a “trust gap” with their clients. Building trust through AI begins with transparency in how companies implement — and communicate about — the technology, experts say.

A recent study by Vodafone Business and the London School of Economics and Political Science highlights how technology can exacerbate the trust problem. According to the study report, there is a large gap between how much businesses think their customers trust them and how much customers actually do.

The study also highlights room for improvement: it found that businesses using chatbots, AI and generative AI (Gen AI) to deliver faster response times could see a 16 per cent jump in trust scores by deploying the technology ethically and for customers’ benefit.

Be transparent in organisational AI use

It is crucial that businesses, particularly in professional services and other sectors with high data-privacy concerns, be clear and up-front about their use of the technology — including why it is necessary in the first place, and the benefits and risks it may involve for clients.

Dr Simon Longstaff AO FCPA, executive director of The Ethics Centre, says businesses should “think at a larger level about the purposes for which they are using AI” and whether they are legitimate, justifiable and sensible.

Trust is not only a “real problem” for businesses generally, says Mary-Anne Williams, a professor at UNSW Business School and founder of the UNSW Business AI Lab; helping customers and users calibrate their trust is also a challenge.

“We need to be sure that AI systems are working in the interests of the user or the customer,” she adds. “Communicating what the AI’s purpose is and what value it can create safely is critical for building and maintaining trust.”

A key challenge is the “strong heterogeneity of customers and clients in terms of their knowledge of AI and skills interacting with it”, says Sam Kirshner, an associate professor at UNSW Business School who studies how algorithms and AI affect decision-making.

Clients’ response to a company’s use of AI will thus be “largely driven by what they think of that organisation, as well as what they think of AI more generally”, he adds.

“When presenting AI to clients, I would try to think in terms of these levels of detail: how they would connect to the audience, but also whether the audience is more likely to be mathematically oriented, more visual or more verbal,” he says.

What happens when AI makes a mistake?

Following responsible AI principles, including explainability, transparency and contestability, helps engender client trust by providing some insight into decision-making processes and outcomes, according to Williams.

Moreover, interactions and decisions involving AI have secondary and tertiary effects that organisations must also consider. Here, too, it helps to understand AI’s limitations.

“People usually want AI to explain its adverse decisions to ensure [a result] is not a mistake or gain insights into how they might get a better result next time, which requires transparency,” Williams says.

“It is essential that customers and users know neural net-type AI systems are considered to be ‘black boxes’, which means they lack transparency, and it is difficult to ensure accountability.”

Kirshner sees accountability and contestability, rather than transparency, as the most important principles for client trust, because most people will not think about these issues until something goes wrong.

“Is there some avenue for recourse? Will someone actually be held accountable?” he says. “If I were to wave a magic wand to make every company abide by these fairness principles, I would say those two are more important than transparency and explainability.”

How to build client trust in AI

Companies must meet two conditions to build trust in their AI use. The first is specifying the standard for judgment on which decision-making will be based, explains Longstaff. Businesses “need to be able to say to clients: these are the core values and principles that have been built into the design of our AI, from the level of coding through to its application”, he says.

The second condition, Longstaff continues, is that “clients need to have their own experience of its use to match those expectations and be judged against the standards you’ve declared up-front to be the appropriate basis.”

Kirshner says it is important that companies also communicate where they have humans in the loop.

“Ultimately, I think people will want to understand: is it totally autonomous or are there people? How big are the decisions being made?” he says.

“That can also help bridge this trust gap — particularly if it’s business-to-business, where there is a more sophisticated understanding of how the AI is working.”