
At a glance

- Machine teaching builds tools that people without data science skills can use to train artificial intelligence themselves.

- Risks of error and bias arise when a machine’s decisions are informed by an individual rather than a mass of data.

- An ethical framework is required to ensure AI is designed in thoughtful and responsible ways.

The argument about whether robots will take our jobs has moved on, and it now focuses on how artificial intelligence (AI) will free us up to do higher-value work. When HR professionals no longer waste time chasing annual leave forms, for instance, they can become more deeply involved in strategic matters. When the task of transferring figures from the journal to the ledger is removed, accountants can become true business partners. That’s the dream, and it has already begun to come true.

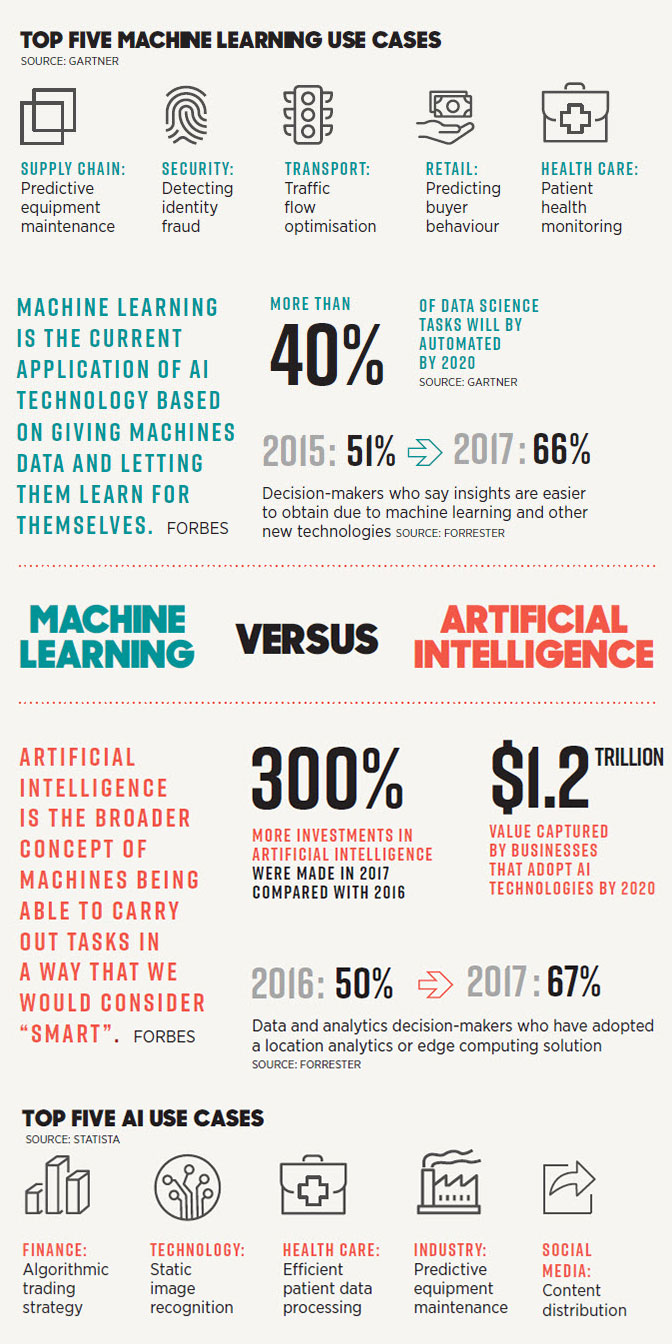

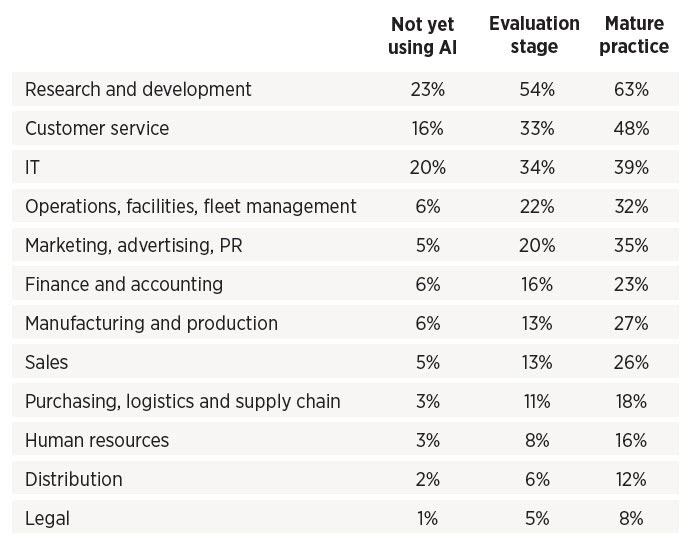

However, continued advances in automation, AI and machine learning require a great deal of data science talent. As organisations around the world battle for that talent, there’s simply not enough to go around.

One proposed solution to the talent squeeze is the development of machine learning capabilities that require no data science knowledge: software tools that, like word processing packages or spreadsheets, are built for the everyday worker. This new subset of machine learning is known as machine teaching.

What is machine teaching? Dr Ali Akbari, senior artificial intelligence lead at Unisys APAC, explains:

“So far, when we have been talking about machine learning, the focus has been on algorithms and models and how we can generate models to deliver the target that we want,” he says. “This means that if a company, large or small, wants to utilise machine learning, they need to employ data scientists and spend a lot of time and money.


“Machine teaching is about simplifying things by building multi-purpose tools that can be given to business users. Then the focus is on how to teach users to do it well, rather than how to develop the algorithm.”

Microsoft is betting on the new technology, having acquired machine teaching start-up Bonsai and established an internal Machine Teaching Group.

“Traditional machine learning requires volumes of labelled data that can be time consuming and expensive to produce,” the Machine Teaching Group website says. “Machine teaching leverages the human capability to decompose and explain concepts to train machine learning models, which is much more efficient than using labels alone. With the human teacher and the machine learning model working together in a real-time interactive process, we can dramatically speed up model-building time.”

Patrice Simard, research manager with Microsoft’s machine teaching innovation team, makes bold claims about the potential of the technology. “Any task that you can teach to another human, you should be able to teach to a machine,” he says.

Some experts would beg to differ. Professor Michael Davern CPA, chair of accounting and business information systems in the Faculty of Business and Economics at the University of Melbourne, describes such a claim as “marketing spin”.

“This is not going to allow machines to automatically carry out expert tasks,” says Davern, also a professor (honorary) in computing and information systems in the university’s Melbourne School of Engineering. “The focus is still on routine data-processing tasks. What we’re seeing is simply the next level of automation.”

Machine teaching, Davern says, is about engaging a human expert in order to speed up the machine learning process or to make it more efficient.

“For example, give a machine 10,000 sales transactions and it can classify them and identify unusual transactions,” he explains. “But the machine can’t interpret the results. It takes human expertise to determine the business implications, if there are any. Machine teaching doesn’t replace the expert; rather, it uses experts to prime the machine to focus on business-relevant differences in the transactions from the outset. It ultimately means you need less training data and can get more meaningful results, but it isn’t a revolutionary change.”

Democratising AI: all about the individual

The purpose of machine teaching tools is to make the technology simple enough for anybody to use.

“It is automating the work of data scientists,” Akbari says. “But it doesn’t mean putting them out of a job. It’s just changing the way we work. If the repetitive parts of a data scientist’s job are automated, we can focus more on the creative parts.”

This means data scientists are finally automating their own jobs, but what about the person who is using the machine, the individual who is teaching it to do their job?

Is there a risk for them? Once again, the intention of the software is simply to make people’s jobs easier, Akbari says. It is about further introducing AI to the workplace and allowing people to focus on other aspects of their work.

There is, of course, an increased risk of error when some of the machine’s decisions are being informed through feedback from a single individual, as opposed to a mass of data. However, this is being considered, Akbari says.

“If we are training and teaching these algorithms using data that is not complete or that is biased, then we are creating a machine learning tool that won’t make a fair decision.


“That can happen, and we need to be very careful and aware of this. It starts with defining, and having ways to identify, these biased or incorrect decisions, then making sure we provide enough diverse data and diversity of people in designing those algorithms.”

Mitigating the risk of bad decisions resulting from the input of a single user requires users to be trained in cultural awareness, Akbari says.

“Let’s say we create a multi-purpose fraud detection tool, and we tell a user to feed it with past examples of fraudulent transactions,” he explains. “If we don’t give them cultural awareness of how this technology works then, based on what is available, they might just input examples of fraudulent transactions that mostly occurred between 10am and midday in Sydney. That will result in the machine creating a model that unfairly penalises transactions made during that time in Sydney.

“Teaching people to use these tools properly will be very important … It’s important to make sure the people using this tool realise that it is a double-sided knife. It has strengths and it has weaknesses, so they need to be careful.”
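To make Akbari’s fraud example concrete, the short sketch below shows how a skewed set of examples can teach a model the wrong lesson. Everything in it is hypothetical and deliberately simplistic: the transaction hours are invented, and the “model” is just a per-hour count of how often a labelled example was fraudulent, not how any real fraud detection product works. The helper names `fraud_rate_by_hour` and `hour_skew` are illustrative only. The second function shows one simple check a tool could run before training: how concentrated the user’s fraud examples are in a single time window.

```python
from collections import Counter

# Hypothetical training examples, recorded as hour of day (0-23).
# The fraud examples come from a single user and, purely because of what
# was available to them, all fall between 10am and midday.
fraud_hours = [10, 10, 11, 11, 11, 10, 11, 10]
legit_hours = [h for h in range(24) for _ in range(5)]  # 5 legitimate examples per hour


def fraud_rate_by_hour(fraud_hours, legit_hours):
    """Naive estimate of P(fraud | hour) from the labelled examples."""
    fraud = Counter(fraud_hours)
    legit = Counter(legit_hours)
    rates = {}
    for hour in range(24):
        total = fraud[hour] + legit[hour]
        rates[hour] = fraud[hour] / total if total else 0.0
    return rates


def hour_skew(fraud_hours, window=(10, 12)):
    """Share of fraud examples falling inside the [start, end) hour window."""
    start, end = window
    inside = sum(1 for h in fraud_hours if start <= h < end)
    return inside / len(fraud_hours)


rates = fraud_rate_by_hour(fraud_hours, legit_hours)
# The model now treats 10am as risky and 3pm as perfectly safe,
# simply because of when the examples happened to occur.
print(f"10am: {rates[10]:.2f}, 3pm: {rates[15]:.2f}")  # 10am: 0.44, 3pm: 0.00
print(f"share of fraud examples 10am-midday: {hour_skew(fraud_hours):.0%}")  # 100%
```

A two-hour window covers only about 8 per cent of the day, so a tool that sees 100 per cent of a user’s fraud examples landing there has good reason to warn the user before building a model, which is the kind of awareness Akbari argues users need.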

Machine teaching and ethics

AI, machine learning and machine teaching are undeniably powerful offerings for the future of human society; that much is widely accepted. However, with this power comes the risk of mismanagement.

“AI will provide great opportunity and great potential,” says Robert Wickham, Salesforce’s vice president and growth program manager, Asia-Pacific region. “At the same time, you have to take an ethical approach to ensure you’re designing for the outcomes that you want and in a thoughtful, responsible way.”

There are four dimensions to ensuring that the ethical, human side of AI is properly governed, Wickham says. These apply equally to those developing and using the software.

The first is accountability, meaning that while building an AI system focuses on developing capabilities, it is always done with a view to being accountable to customers and to society.

This comes partly from continually striving to bring independent feedback and diverse voices into the process. A lack of diversity can create serious operational problems for any AI system.

Facial recognition software, for instance, famously went through a period in which it struggled to recognise black faces. Many attribute this to it being developed by white engineers and trained on data made up almost exclusively of white faces.

“The second is that the process must be transparent,” Wickham says. “Part of where AI has presented challenges in the past is with ‘black box algorithms’ where you see an outcome, but you’re unable to explain the process by which the algorithms came to those outcomes.

“Unless they’re transparent, it’s really problematic. When you extrapolate that to a world where these algorithms are being used to drive processes and procedures that affect entire markets and the lives of many, many people, that transparency is very important.”

The third is that AI must be developed to augment the human condition rather than to replace it.

“Finally, ethics in AI is about being inclusive,” Wickham says. “This speaks not only to diversity, but also to creating something that is not focused on the creators, but is instead focused on the user. It involves having a view that your users are going to span the spectrum, and creating a system that allows for that.”

How is this all implemented on a practical, organisational level? At Salesforce, Wickham says, there is now a chief ethics officer and an advisory council on ethics, which means the question of ethics will always be on the C-level agenda.

“Outside of this organisation, I’m confident that these matters are being discussed at the right levels,” he says. “At Davos [World Economic Forum] last year, for instance, the ethical use of technology, including AI, was a key topic. In Australia, our organisations are beginning to ask whether we have yet got it right, how we can elevate the conversation to the right level and whether we are making sure ethics is part of the social conversation around AI. We are also seeing governments begin to collaborate with private companies to think through the required regulations.”

This conversation will become increasingly essential as businesses of all shapes and sizes bring machine teaching software on board to look after various routine tasks.