
At a glance

- Standard-setters are consulting on proposed uses of AI and potential consequences if no AI standards are set.

- Accountants and auditors may become “guardians”, acting to intervene when an AI program makes bad decisions.

By Jan Begg

Artificial intelligence (AI) is fast becoming important for accountants and businesses, and how it is used raises several ethical issues and questions.

Because autonomous AI algorithms teach themselves, concerns have been raised that some machine learning techniques are essentially “black boxes”, making it technically impossible to fully understand how the machine arrived at a result. It will become increasingly important to develop AI algorithms that are transparent to inspection, auditable, secure and robust against manipulation and misuse.

For accountants, businesses and the wider community to realise AI’s potentially significant benefits, it is vital that they can trust AI applications. In 2019, the Australian Government’s Department of Industry, Innovation and Science funded the development of a discussion paper titled Artificial Intelligence: Australia’s Ethics Framework.

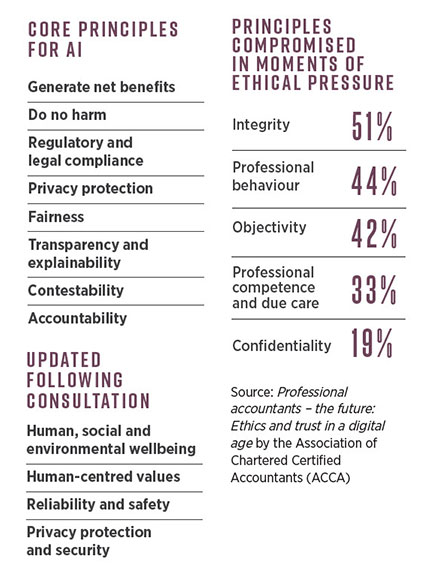

This paper, with input from the Commonwealth Scientific and Industrial Research Organisation’s (CSIRO) Data61 and universities, proposed eight core principles for AI and a nine-factor toolkit for ethical AI.

The framework explores the challenges of understanding the algorithms and decision-making inside the “black box”. A key message is the challenge of updating existing laws, such as anti-discrimination and privacy laws, and applying them to AI and the machine learning life cycle.

One of the most significant ethical questions in the context of AI is that of responsibility and accountability. Who is to blame if the AI-powered machine fails to execute its assigned tasks or causes physical or economic harm in executing tasks? How can AI algorithms be programmed to ensure they operate in an ethically correct and acceptable way?

Data and analysis are at the heart of our digital future and feed machine learning. Increasingly, the holders, creators and users of data are being asked to share it.

Such requests raise ethical questions as well as legal ones. For example, does a data-sharing arrangement comply with existing laws and policies, such as Australia’s Privacy Act 1988 (especially Australian Privacy Principle 11, security of personal information) and the Protective Security Policy Framework (classified government information)?

The European Union (EU) General Data Protection Regulation (GDPR) is also an important consideration for many businesses wishing to use or share data, including businesses outside the EU. In particular, the GDPR includes the right to be forgotten (the right to erasure under Article 17), which requires organisations with data operations in the EU to allow people to request the removal of personal information held on them. These requirements extend to system design, including AI algorithms.
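Deleting someone’s records does not by itself undo what a model has already learned from them, so erasure requests need to reach AI systems too. The following is a minimal Python sketch, assuming a hypothetical in-memory store in which each model records the data subjects it was trained on; the `DataStore`, `Model` and `erase_subject` names are illustrative only and are not a GDPR-prescribed mechanism or any library’s API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Model:
    """Hypothetical trained model that tracks whose data it was trained on."""
    name: str
    trained_on: set[str] = field(default_factory=set)
    needs_retraining: bool = False


@dataclass
class DataStore:
    """Hypothetical store of personal records keyed by data-subject ID."""
    records: dict[str, list[str]] = field(default_factory=dict)
    models: list[Model] = field(default_factory=list)

    def erase_subject(self, subject_id: str) -> list[str]:
        """Delete a subject's records and flag every model trained on them.

        Returns the names of the flagged models, which would then need to be
        retrained (or otherwise reviewed) without the erased data.
        """
        self.records.pop(subject_id, None)
        flagged = []
        for model in self.models:
            if subject_id in model.trained_on:
                model.trained_on.discard(subject_id)
                model.needs_retraining = True
                flagged.append(model.name)
        return flagged


store = DataStore(
    records={"alice": ["invoice-1"], "bob": ["invoice-2"]},
    models=[Model("credit-score", {"alice", "bob"}), Model("churn", {"bob"})],
)
print(store.erase_subject("alice"))  # ['credit-score']
```

The sketch only flags affected models; how a flagged model would actually be retrained or reviewed is left open.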

Standards Australia has begun a consultation on:

- Opportunities for AI adoption

- Australia’s use of AI to enhance competitive advantage

- Potential consequences if no AI standards are set

Separately, Telstra, Microsoft, National Australia Bank, Commonwealth Bank and technology company Flamingo AI will trial the Government’s updated AI ethics principles.


International initiatives

The Organisation for Economic Co-operation and Development (OECD) has adopted AI principles whose core concepts are that the technology should be robust, safe, fair and trustworthy. International initiatives such as these encourage certification systems. However, because most are principles-based, their implementation is difficult to assess.

The OECD principles also call for safeguards such as the capacity for human determination, so that automated decision systems are not the sole decision-maker, particularly when a decision has legal ramifications. The EU’s GDPR makes a similar requirement explicit in Article 22: “The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her”.
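In system terms, a right not to be subject to solely automated decisions with legal or similarly significant effects means those decisions need a route to a human reviewer. A minimal Python sketch, assuming each hypothetical `Decision` already carries a flag saying whether it has such effects; setting that flag correctly, and handling any legal exceptions, is outside the sketch.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """Hypothetical output of an automated decision system."""
    subject_id: str
    outcome: str        # e.g. "approve" or "decline"
    legal_effect: bool  # legal or similarly significant effect on the person?


def triage(decisions: list[Decision]) -> tuple[list[Decision], list[Decision]]:
    """Split decisions into those that may be finalised automatically and
    those that must go to a human reviewer before taking effect."""
    automated = [d for d in decisions if not d.legal_effect]
    human_review = [d for d in decisions if d.legal_effect]
    return automated, human_review


batch = [
    Decision("c-101", "approve", legal_effect=False),
    Decision("c-102", "decline", legal_effect=True),
]
auto, review = triage(batch)
print([d.subject_id for d in review])  # ['c-102']
```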

The World Economic Forum’s (WEF) white paper AI Governance – A Holistic Approach to Implement Ethics into AI suggests the following approaches to implementing ethics in AI:

- Bottom-up approach: machines learn how to make ethical decisions by observing human behaviour over time.

- Top-down approach: ethical principles are programmed into artificially intelligent machines, so that the machine reacts accordingly in situations where it is required to make an ethical decision.

- Dogmatic approach: specific ethical schools of thought are programmed into the machine, rather than responses to possible scenarios.

The top-down approach may be the most suitable. Under a bottom-up approach, machines may adopt and execute behaviour that is common but not necessarily ethical.
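The risk with a purely bottom-up approach can be shown with a toy example: a learner that copies the most common observed choice will reproduce a common but unethical practice, while the same learner constrained by an explicitly programmed rule (the top-down element) cannot choose the prohibited action. The scenario, action names and rule below are hypothetical, and real bottom-up systems learn far more richly than a majority vote.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical observed human choices in one recurring situation: most
# people backdated the invoice, which is common but not ethical.
OBSERVED = ["backdate_invoice"] * 7 + ["record_on_actual_date"] * 3

# Hypothetical top-down rule programmed in advance: never take these actions.
PROHIBITED = {"backdate_invoice"}


def bottom_up_choice(observations: list[str]) -> str:
    """Bottom-up: adopt whichever behaviour was observed most often."""
    return Counter(observations).most_common(1)[0][0]


def constrained_choice(observations: list[str], prohibited: set[str]) -> str:
    """Same learner, but a top-down rule removes prohibited actions first."""
    permitted = [action for action in observations if action not in prohibited]
    if not permitted:
        raise ValueError("no permitted action observed; escalate to a human")
    return Counter(permitted).most_common(1)[0][0]


print(bottom_up_choice(OBSERVED))                # backdate_invoice
print(constrained_choice(OBSERVED, PROHIBITED))  # record_on_actual_date
```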

It is questionable whether the dogmatic approach is feasible, as no single ethical school of thought is definitively right or wrong.

Programming such an algorithm would likely have to take into account several different schools of thought – potentially with contrasting or contradictory rules.

The OECD, WEF and others agree that we must remain in control of AI systems.

In a world where decisions are increasingly being made autonomously by AI-driven machines, a monitoring process must be put in place that has the capability to intervene when – or preferably before – things go wrong.

The WEF describes a “guardian AI” that intervenes when an AI system makes unethical decisions. This guardian, potentially a human and machine hybrid, could monitor the AI algorithm’s compliance with predetermined ethical rules and, if required, report unethical behaviour to the relevant enforcement agency. Accountants and auditors could potentially take on this guardian role.
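The guardian idea can be pictured as a check that sits between an AI system and the actions it takes: each proposed decision is tested against predetermined rules, compliant decisions proceed, and breaches are blocked and logged so they can be reported. A minimal Python sketch under those assumptions; the `Guardian` class, both rules and the 10,000 limit are hypothetical illustrations, not taken from the WEF paper.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass(frozen=True)
class Proposal:
    """Hypothetical decision proposed by the AI system before it is executed."""
    action: str
    amount: float
    uses_protected_attribute: bool


# A rule returns a description of the breach, or None if the proposal complies.
Rule = Callable[[Proposal], Optional[str]]


def no_protected_attributes(p: Proposal) -> Optional[str]:
    """Hypothetical rule reflecting anti-discrimination requirements."""
    if p.uses_protected_attribute:
        return "decision relies on a protected attribute"
    return None


def within_authority_limit(p: Proposal, limit: float = 10_000.0) -> Optional[str]:
    """Hypothetical rule: amounts above the limit need human sign-off."""
    if p.amount > limit:
        return f"amount {p.amount:.2f} exceeds limit {limit:.2f}"
    return None


@dataclass
class Guardian:
    """Checks each proposal against predetermined rules and logs breaches."""
    rules: list[Rule]
    breaches: list[tuple[Proposal, str]] = field(default_factory=list)

    def review(self, proposal: Proposal) -> bool:
        """Return True if the proposal may proceed; otherwise log and block it."""
        found = [msg for rule in self.rules if (msg := rule(proposal)) is not None]
        self.breaches.extend((proposal, msg) for msg in found)
        return not found

    def report(self) -> list[str]:
        """Summarise logged breaches for escalation, e.g. to a human reviewer."""
        return [f"{p.action}: {msg}" for p, msg in self.breaches]


guardian = Guardian(rules=[no_protected_attributes, within_authority_limit])
print(guardian.review(Proposal("approve_loan", 5_000.0, False)))  # True
print(guardian.review(Proposal("approve_loan", 25_000.0, True)))  # False
print(guardian.report())
```

Keeping each rule as a plain, separate function means a human guardian could inspect and test every rule on its own, in line with the aim of algorithms that are transparent to inspection and auditable.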

While AI presents opportunities to accountants with respect to engagement, performance and efficiency, CPA Australia members must be aware of their professional obligations under accounting professional and ethical standards. APES 110, Code of Ethics for Professional Accountants, provides a framework to use when considering ethical issues arising from the use of AI.

Members must be satisfied that sufficient controls and safeguards are available when using AI-powered tools and/or software to reduce the threats to the fundamental principles to an acceptable level. Recent research into the impact of AI on the fundamental principles demonstrated that the principles most at risk were integrity, professional behaviour and objectivity.